Saturday, 29 March 2025

Techniques for suppressing health experts' social media accounts, part 1 - The Science™ versus key opinion leaders challenging the COVID-19 narrative

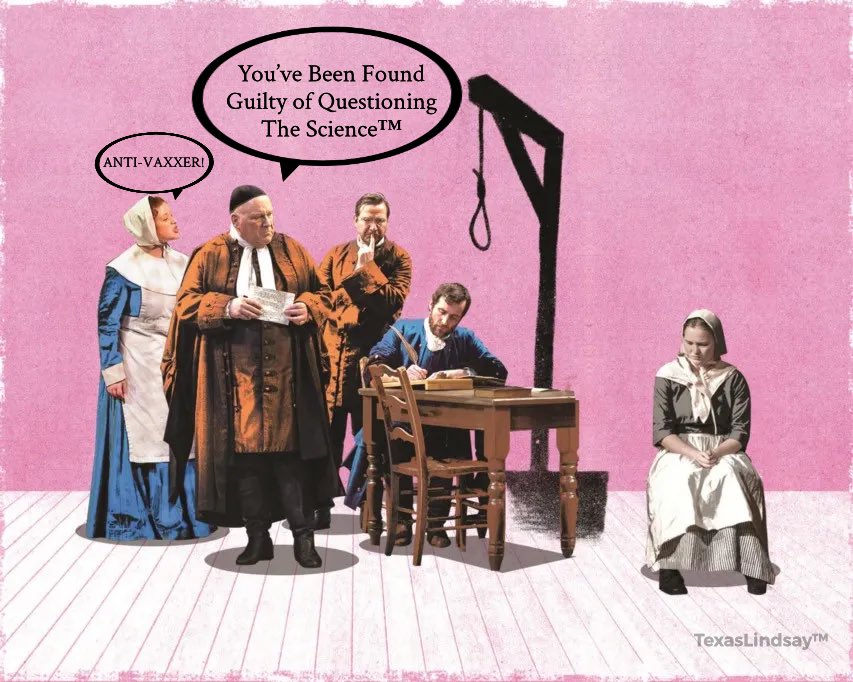

There has been extensive censorship of legitimate, expert criticism during the COVID-19 event (Kheriaty, 2022; Shir-Raz et al., 2023; Hughes, 2024). Such scientific suppression exposes how narrow a frame the sponsors of global health authoritarianism permit for questioning The Science™. Whereas genuine science innovates through critique, incorporated science does not welcome questioning. Like fascism, corporatist science views critiques of its interventions as heresy. In the COVID-19 event, key opinion leaders who criticised the lack of scientific rigour behind public health measures (such as genetic vaccine mandates) were treated as heretics by a contemporary version of the Inquisition (Malone et al., 2024). Dissidents were accused of sharing "MDM" (Misinformation, Disinformation and Malinformation) that was assumed to place the public's lives at risk. Particularly in prestigious medical universities, questioning the dictates of health authorities and their powerful sponsors was viewed as unacceptable, falling completely outside an Overton Window that had become far more restrictive due to fear-mongering around a "pandemic" (see Figure 1).

Higher Education is particularly susceptible to this groupthink, as it lends itself to a purity spiral, which in turn contributes to the growing spiral of silence for "unacceptable views". A purity spiral is a form of groupthink in which it is more beneficial to hold some views than not to hold them. In a process of moral outbidding, individual academics with more extreme views are rewarded. This was evident at universities where genetic vaccine proponents loudly supported the mandatory vaccination of students, despite students facing minimal, if any, risk from COVID-19. In contrast, scholars expressing moderation, doubt or nuance faced ostracism as "anti-vaxxers". Universities' tight-knit communities exert strong pressure for social conformity: grants, career support and other forms of institutional support depend on collegiality and alignment with prevailing norms. Being labelled a contrarian for questioning a ‘sacred cow’, such as "safe and effective" genetic vaccines, is likely to jeopardise one’s reputation and academic future. Academic disciplines coalesce around shared paradigms and axiomatic truths, routinely amplifying groupthink. Challenging reified understandings as shibboleths can lead to exclusion from conferences and journals, and can cost scholars departmental, faculty and even university support. This is particularly so where powerful funders object to such dissent!

Here, administrative orthodoxy can signal an “official” position for the university that chills debate. Dissenters' fears of isolation and reprisal (such as poor evaluations and formal complaints for not following the official line) may convince them to self-censor. This is especially likely where nonconformists judge that opposition to their opinion is virulent and that expressing a disagreeable viewpoint carries high costs, such as navigating cancel culture. Individuals who calculate that they have little chance of convincing others, and are likely to pay a steep price, self-censor and so contribute to the growing spiral of silence. The COVID-19 event is an excellent example of this growing spiral’s chilling effect on free speech and independent enquiry.

COVID-19 is highly pertinent for critiquing censorship in the Medical and Health Sciences, not least because conflicts of interest shaped global health "authorities'" policy guidance. Notably, the World Health Organisation promoted poorly substantiated and even unscientific guidelines (Noakes et al., 2021) that merit being considered MDM. In following such dictates from the top policy makers of the Global Public-Private Partnership (GPPP or G3P), most governments' health authorities seemed to ignore key facts, namely: i. COVID-19 risk was steeply age-stratified (Verity et al., 2020; Ho et al., 2020; Bergman et al., 2021); ii. prior COVID-19 infection can provide substantial immunity (Nattrass et al., 2021); iii. COVID-19 genetic vaccines did not stop disease transmission (Eyre et al., 2022; Wilder-Smith, 2022); iv. mass-masking was ineffective (Jefferson et al., 2023; Halperin, 2024); v. school closures were unwarranted (Wu et al., 2021); and vi. there were better alternatives to lengthy, whole-society lockdowns (Coccia, 2021; Gandhi and Venkatesh, 2021; Herby et al., 2024). Both international policy makers' and local health authorities' flawed guidance must be open to debate and rigorous critique. Had public health interventions been adapted to these key facts during the COVID-19 event, the revised guidance could well have contributed to better social, health and economic outcomes for billions of people!

This post focuses on six types of suppression techniques used against dissenting accounts that the G3P-sponsored censorship industrial complex deems illegitimate "disinformation" spreaders. This is an important concern, since claims that suppressing the digital reach of free speech can "protect public safety" were proved false during COVID-19. A case in point is the censorship of criticism of employee vaccine mandates. North American employers' mandates are directly linked to excess disabilities and deaths for hundreds of thousands of working-age employees (Dowd, 2024). Deceptively censoring individuals' reports of vaccine injuries as "malinformation", or automatically labelling criticism of Operation Warp Speed as "disinformation", would hamper US employees' ability to make fully informed decisions on the safety of genetic vaccines. Such deleterious censorship must be critically examined by academics. In contrast, 'disinformation-for-hire' scholars (Harsin, 2024) will no doubt remain safely ensconced behind their profitable MDM blinkers.

This post is the first in a series that spotlights the myriad account suppression techniques that exist. For each, examples of censorship against health experts' opinions are provided. Hopefully, readers can then better appreciate the asymmetric struggle that dissidents face when the censorship industrial complex targets their accounts with many of these strategies across multiple social media platforms:

Practices for @Account suppression

#1 Deception - users are not alerted to unconstitutional limitations on their free speech

#2 Cyberstalking - facilitating the virtual and physical targeting of dissidents

#3 Othering - enabling public character assassination via cyber smears

#4 Not blocking impersonators or preventing brandjacked accounts

Instead of blanket censorship, I am having YouTube bury all my actual interviews/content with videos that use short, out of context clips from interviews to promote things I would never and have never said. Below is what happens when you search my name on YouTube, every single… pic.twitter.com/xNGrfMMq52

— Whitney Webb (@_whitneywebb) August 12, 2024

Whether such activities come from intelligence services or cybercriminals, they are very hard for dissidents and/or their representatives to counter effectively. Popular social media companies (notably META, X and TikTok) seldom respond quickly to scams, or to the digital "repersoning" discussed by James Corbett and Whitney Webb on The Corbett Report.

In Corbett's case, after his account was scrubbed from YouTube, many accounts featuring his identity started cropping up there. Webb, by contrast, has no public profile outside of X, yet accounts featuring her identity were created on Facebook and YouTube. "Her" channels clipped old interviews she gave and edited them into documentaries on topics Whitney has never publicly spoken about, such as Bitcoin and CERN. They also misrepresented her views on the transnational power structure behind the COVID-19 event, suggesting she held only Emmanuel Macron and Klaus Schwab responsible for driving it. They used AI-generated thumbnails of her and superimposed her own words from the interviews. Such content proved popular and was widely reshared by legitimate accounts, which shows how difficult it is for dissidents to counter it. Webb could not get Facebook to take down the accounts without supplying a government-issued ID to verify her own identity.

Digital platforms may be uninterested in offering genuine support: they may take no corrective action when following proxy orders from the US Department of State (aka 'jawboning') or from members of the Five Eyes (FVEY) intelligence alliance. In stark contrast to marginalised dissenters, VIPs in multinationals enjoy access to online threat protection services for executives (such as ZeroFox) that cover brandjacking and over 100 other cybercriminal use-cases.

#5 Filtering an account's visibility through ghostbanning

As the Google Leaks (2019), Facebook Files (2021) and Twitter Files (2022) revelations have spotlighted, social media platforms have numerous algorithmic censorship options, such as filtering the visibility of users' accounts. Targeted users may be isolated and throttled for breaking "community standards" or government censorship rules. During the COVID-19 event, dissenters' accounts were placed in silos, de-boosted, and subjected to reply de-boosting. Contrarians' accounts were subject to ghostbanning (aka shadowbanning), a practice that secretly reduces an account’s visibility or reach without notifying its owner. Ghostbanning limits who can see the posts, comments, or interactions. This includes muting replies and excluding targeted accounts' posts from trends, hashtags, searches and followers’ feeds (except where users seek out a filtered account's profile directly). Such suppression effectively silences a user's digital voice, whilst he or she continues to post under the illusion of normal activity. Ghostbanning is thus a "stealth censorship" tactic linked to content moderation agendas.
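To make the mechanism described above more concrete, here is a minimal Python sketch under stated assumptions. It models ghostbanning as a per-account set of filtered surfaces: trends, hashtags, search and follower feeds are filtered, direct profile visits are left open, and the author's own view does not change. The surface names, the `Account` class and the `ghostban`, `is_visible` and `author_view` helpers are hypothetical illustrations, not any platform's actual code or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical discovery surfaces named in the text; not a real platform's schema.
SURFACES = ("trends", "hashtags", "search", "follower_feed", "profile")


@dataclass
class Account:
    handle: str
    # Surfaces on which this account's posts are hidden (empty = no filtering).
    filtered_from: set[str] = field(default_factory=set)


def ghostban(account: Account) -> None:
    """Hide the account everywhere except direct profile visits.

    Nothing is sent to the account owner: the filter is silent.
    """
    account.filtered_from = set(SURFACES) - {"profile"}


def is_visible(account: Account, surface: str) -> bool:
    """Return True if the account's posts can appear on the given surface."""
    if surface not in SURFACES:
        raise ValueError(f"unknown surface: {surface}")
    return surface not in account.filtered_from


def author_view(account: Account) -> str:
    # Hypothetical: the author sees the same confirmation whether or not the
    # account is filtered, which is what makes the practice "stealth".
    return f"@{account.handle}: your post is live"


if __name__ == "__main__":
    acct = Account("example_dissenter")
    ghostban(acct)
    print([s for s in SURFACES if is_visible(acct, s)])  # ['profile']
    print(author_view(acct))
```

Leaving `profile` open mirrors the point that users who seek out a filtered account directly can still see its posts, while its reach on every other surface collapses without the owner being told.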

The term gained prominence through the case of the Great Barrington Declaration's authors, Professors Jay Bhattacharya, Martin Kulldorff and Sunetra Gupta. Published on October 4, 2020, this public statement and proposal flagged grave concerns about the damaging physical and mental health impacts of the dominant COVID-19 policies. It argued that focused protection should be followed rather than blanket lockdowns, and that allowing controlled spread among low-risk groups would eventually result in herd immunity. Ten days later, the John Snow Memorandum was published as a counter, in defence of the official COVID-19 narrative's policies. Mainstream media and health authorities amplified it, as did social media platforms, given the memorandum's alignment with their prevailing policies against "misinformation" circa 2020. In contrast, the Great Barrington Declaration was targeted indirectly through platform actions against its proponents and related content:

Stanford Professor of Medicine Dr Jay Bhattacharya’s Twitter account was revealed (via the 2022 Twitter Files) to have been blacklisted, reducing its visibility. His tweets questioning lockdown efficacy and vaccine mandates were subject to algorithmic suppression: algorithms could flag such content with labels like “Visibility Filtering” (VF) or “Do Not Amplify”. For instance, Bhattacharya reported that his tweets about the Declaration and seroprevalence studies (showing wider COVID-19 spread than official numbers suggested) were throttled. Journalist Matt Taibbi's reporting on the "Twitter Files" leaks confirmed that Twitter had blacklisted Prof Bhattacharya's account, limiting its reach due to his contrarian stance. YouTube also removed videos in which he featured, such as interviews in which he criticised lockdown policies.

Epidemiologist and biostatistician Prof Kulldorff observed that social media censorship stifled opportunities for scientific debate. He experienced direct censorship on multiple platforms, including shadowbans. Twitter temporarily suspended his account in 2021 for tweeting that not everyone needed the COVID-19 vaccine ('Those with prior natural infection do not need it. Nor children'). Posts on X and web reports indicate Kulldorff was shadowbanned beyond this month-long suspension. The Twitter Files, released in 2022, revealed he was blacklisted, meaning his tweets’ visibility was algorithmically reduced. Twitter suppressed Kulldorff's accurate genetic vaccine critique, preventing comments and likes. Internal Twitter flags like “Trends Blacklisted” or “Search Blacklisted” (leaked during the 2020 Twitter hack) suggest Kulldorff's account was throttled in searches and trends, a hallmark of shadowbanning, where reach is curtailed without notification. Algorithmic deamplification excluded Prof Kulldorff's tweets from trends, search results and followers’ feeds, except where users sought out his profile directly. This reflects how social media companies may apply visibility filters (such as a Not Safe For Work (NSFW) view). Kulldorff also flagged that LinkedIn’s censorship pushed him to platforms like Gab, implying a chilling effect on his professional network presence.

An Oxford University epidemiologist, Professor Gupta faced less overt account-level censorship, but still had to contend with content suppression. Her interviews and posts on Twitter advocating herd immunity via natural infection amongst the young and healthy were often flagged or down-ranked.

#6 Penalising accounts that share COVID-19 "misinformation"

Do share your views by commenting below, or reply to this tweet thread at https://x.com/travisnoakes/status/1906250555564900710.

Friday, 26 July 2024

Content suppression techniques against dissent in the Fifth Estate - examples of COVID-19 censorship on social media

Written for researchers and others interested in the many methods available to suppress dissidents' digital voices. These techniques support contemporary censorship online, posing a digital visibility risk for dissidents challenging orthodox narratives in science.

The Fourth Estate emerged in the eighteenth century as the printing press enabled the rise of an independent press that could help check the power of governments, business, and industry. In similar ways, the internet supports a more independent collectivity of networked individuals, who contribute to a Fifth Estate (Dutton, 2023). This concept acknowledges how a network power shift results from individuals who can search, create, network, collaborate, and leak information in strategic ways. Such affordances can enhance individuals' informational and communicative power vis-à-vis other actors and institutions. A network power shift enables greater democratic accountability, whilst empowering networked agents in their everyday life and work. Digital platforms do enable online content creators to generate and share news that digital publics amplify via networked affordances (such as likes, quotes and sharing via hashtag communities).

#1 Covering up algorithmic manipulation

Social media users who are not aware of censorship are unlikely to be upset about it (Jansen & Martin, 2015). Social media platforms have not been transparent about how they manipulated their recommender algorithms to give the official COVID-19 narrative higher visibility, or to crowd out dissenters' original contributions on social media timelines and in search results. Such boosting ensured that dissent was seldom seen, or was perceived as a fringe minority's concern. As Dr Robert Malone tweeted, the computational algorithm-based method now 'supports the objectives of a Large Pharma- captured and politicised global public health enterprise'. Social media algorithms have come to serve a medical propaganda purpose that crafts and guides the 'public perception of scientific truths'. While algorithmic manipulation underpins most of the techniques listed below, it is concealed from social media platform users.

#2 Fact choke versus counter-narratives

An example she tweeted about was the BBC's Trusted News Initiative warning in 2019 that anti-vaxxers were gaining traction across the internet, requiring algorithmic intervention to neutralise "anti-vaccine" content. In response, social media platforms were urged to flood users' screens with repetitive pro-(genetic)-vaccine messages normalising these experimental treatments. Simultaneously, messaging attacked alternative treatments that posed a threat to the vaccine agenda. Fact chokes also included 'warning screens' that were displayed before users could click on content flagged by "fact checkers" as "misinformation".

#3 Title-jacking

For the rare dissenting content that achieves high viewership, another challenge is that title-jackers leverage this popularity for very different outputs under exactly the same (or very similar) production titles. This makes it harder for new viewers to find the original work. For example, Liz Crokin's 'Out of the Shadows' documentary describes how Hollywood and the mainstream media manipulate audiences with propaganda. Since this documentary's release, several videos have been published with the same title.

#4 Blacklisting trending dissent

Social media search engines typically allow their users to see what content is currently most popular. On Twitter, dissenting hashtags and keywords that proved popular enough to feature amongst trending content were quickly added to a 'trend blacklist' that hid unorthodox viewpoints. Tweets posted by accounts on this blacklist are prevented from trending regardless of how many likes or retweets they receive. Stanford Health Policy professor Jay Bhattacharya argues he was added to this blacklist for tweeting about a focused alternative to the indiscriminate COVID-19 lockdowns that many governments followed: in particular, The Great Barrington Declaration he co-wrote with Dr. Sunetra Gupta and Dr. Martin Kulldorff, which attracted over 940,000 supporting signatures.

#5 Blacklisting content due to dodgy account interactions or external platform links

#6 Making content unlikeable and unsharable

Dr Steven Kirsch's newsletter (29.05.2024) described how a Rasmussen Reports video on YouTube had its 'like' button removed. As Figure 1 shows, users could only select a 'dislike' option. The button was later restored for www.youtube.com/watch?v=NS_CapegoBA.

Figure 1. YouTube only offers a dislike option for Rasmussen Reports video on Vaccine Deaths - sourced from Dr Steven Kirsch's newsletter (29.05.2024)

Social media platforms may also prevent resharing such content, or prohibit links to external websites that are not supported by these platforms' backends, or have been flagged for featuring inappropriate content.

#7 Disabling public commentary

#8 Making content unsearchable within, and across, digital platforms

#9 Rapid content takedowns

Social media companies could ask users to take down content that breached COVID-19 "misinformation" policies, or automatically remove such content without its creators' consent. In 2021, META reported that it had removed more than 12 million pieces of content on COVID-19 and vaccines that global health experts had flagged as misinformation. YouTube has a medical misinformation policy that follows the World Health Organisation (WHO) and local health authorities' guidance. In June 2021, YouTube removed a podcast in which evidence of a reproductive hazard of mRNA shots was discussed between Dr Robert Malone and Steve Kirsch on Prof Bret Weinstein's DarkHorse channel. Teaching material that critiqued genetic vaccine efficacy data was automatically removed within seconds for going against its guidelines (see Shir-Raz, Elisha, Martin, Ronel and Guetzkow, 2022). The WHO reports that its guidance contributed to 850,000 videos related to harmful or misleading COVID-19 misinformation being removed from YouTube between February 2020 and January 2021.

#10 Creating memory holes

#11 Rewriting history

#12 Concealing the motives behind censorship, and who its real enforcers are

Figure 2. Global Public-Private Partnership (G3P) stakeholders - sourced from IainDavis.com (2021) article at https://unlimitedhangout.com/2021/12/investigative-reports/the-new-normal-the-civil-society-deception.

Friday, 23 December 2022

A summary of 'Who is watching the World Health Organisation? ‘Post-truth’ moments beyond infodemic research'

A major criticism this paper raises is that infodemic research lacks earnest discussion of where health authorities’ own choices and guidelines might be contributing to ‘misinformation’, ‘disinformation’ and even ‘malinformation’. Rushed guidance based on weak evidence from international health organisations can perpetuate negative health and other societal outcomes, not ameliorate them! If health authorities’ choices are not up for review and debate, there is a danger that a hidden goal of the World Health Organisation's (WHO) infodemic research agenda (or related disinfodemic funders’ research) could be to direct attention away from funders' many failures in fighting pandemics with inappropriate guidelines and measures.

In The regime of ‘post-truth’: COVID-19 and the politics of knowledge (at https://www.tandfonline.com/doi/abs/10.1080/01596306.2021.1965544), Kwok, Singh and Heimans (2021) describe how the global health crisis of COVID-19 presents fertile ground for exploring the complex division of knowledge labour in a ‘post-truth’ era. Kwok et al. (2021) illustrate this by describing COVID-19 knowledge production at university. Our paper focuses on the relationships between health communication, public health policy and recommended medical interventions.

Divisions of knowledge labour are described for (1) the ‘infodemic/disinfodemic research agenda’, (2) ‘mRNA vaccine research’ and (3) ‘personal health responsibility’. We argue for exploring intra- and inter-relationships between influential knowledge development fields, in particular those involving the vaccine-manufacturing pharmaceutical companies that drive and promote mRNA knowledge production. Within divisions of knowledge labour (1-3), we identify key inter-group contradictions between the interests of agencies and their contrasting goals. Such conflicts are useful to consider in relation to potential gaps in the WHO’s infodemic research agenda:

For (1), a key contradiction is that infodemic scholars who benefit from health authority funding may find it difficult to question those authorities' “scientific” guidance. We flag how the WHO’s advice for managing COVID-19 departed markedly from a 2019 review of evidence it commissioned (see https://www.ncbi.nlm.nih.gov/pubmed/35444988).

Division (2) features very different contradictions. Notably, the pivotal role that pharmaceutical companies play in generating vaccine discourse is massively conflicted. A conflict of interest arises in pursuing costly research on novel mRNA vaccines, because whether the producing company will ultimately profit from future sales of these therapies depends entirely on the published efficacy and safety results from its own research. The division of knowledge labour for (2) mRNA vaccine development should not be considered separately from COVID-19 knowledge production in Higher Education or from the (1) infodemic research agenda. Multinational pharmaceutical companies direct the research agenda in academia and medical research discourse through the lucrative grants they distribute. Research organisations dependent on external funding to cover budget shortfalls will be more susceptible to those funders' influence on their research programmes.

However, from the perspective of orthodoxy, views that support new paradigms are unverified knowledge (and potentially "misinformation"). Any international health organisation that wishes to be an evaluator must have the scientific expertise for managing this ongoing ‘paradox’, or irresolvable contradiction. Organisations such as the WHO may theoretically be able to convene such knowledge, but their dependency on funding from conflicted parties would normally render them ineligible to perform such a task. This is particularly salient where powerful agents can collaborate across divisions of knowledge labour for establishing an institutional oligarchy. Such hegemonic collaboration can suppress alternative viewpoints that contest and query powerful agents’ interests.

Our article results from collaboration between The Noakes Foundation and PANDA. The authors thank JTSA’s editors for the opportunity to contribute to its special issue, the paper’s critical reviewers for their helpful suggestions and AOSIS for editing and proof-reading the paper.

This is the third publication from The Noakes Foundation’s Academic Free Speech and Digital Voices (AFSDV) project. Do follow me on Twitter or https://www.researchgate.net/project/Academic-Free-Speech-and-Digital-Voices-AFSDV for updates regarding it.

I welcome you sharing constructive comments, below.

orcid.org/0000-0001-9566-8983
